Capability-based JavaScript loading; JS libraries catch up to GWT

Web developers have to walk around dragging a ball and chain. It is fantastic that we have a ridiculous install base (browsers, the Web runtimes) and a dynamic language in JavaScript.

The ball and chain, though, is that we have to care so much about the payload of the applications we write. Less code means fewer bytes to download, and less for the JS runtime to load up.

This has bad consequences:

- You are tempted to write hard-to-understand code

- You have to balance functionality and code size much more than in other environments

- You end up getting tricky, coming up with ways to dynamically load modules on demand

- All of this time is time not spent on the app logic.

When you run into a problem like this in computer science what do you do? Build an abstraction!

GWT has done a great job here. Its very nature requires a compilation step, and once you have to deal with that step… you can do a lot at build time to change the dynamics above.

For example:

- You can write nice explicit source code, and trust that the compiler will output optimized JS (which can look as ugly as sin for all anyone cares)

- Your application can get faster with a new release of GWT, as the compiler gets better

- Advanced techniques such as code splitting and deferred binding let logic be applied at build time as well as at runtime.

Setting GWT aside, when you write a pure JS application for the Web, you are writing a cross-platform application. Unlike with clean, portable C, you don't have a compilation step to do cleanup work for you on each platform, so you end up with a lot of runtime conditional logic.

You try to use object detection rather than user agent conditionals, but you still end up downloading the code needed to run in every environment, and you pay the overhead of loading and executing that code.

Chances are you are smart and don't do if() checks all the time, but instead do them once at load time. For large chunks of divergent behavior, maybe you do something like:

```javascript
var Foo = (sometest) ? function() {
  // do it like this
} : function() {
  // do it like that
};
```

If you look at parts of popular JavaScript frameworks, you see that many of them are abstractions over, or fixes for, various browsers. Browsers have stepped up to the plate recently and fixed a lot, so it is worth taking a step back. Many frameworks carry a chunk of code that lets us do smart things with CSS selector queries. Modern browsers support querySelectorAll (albeit with some bugs) and getElementsByClassName, so much of that code is unneeded… unless you care about older browsers.
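Here is a minimal sketch of the load-time branching described above, applied to the class-name lookup case. `makeGetByClass` is a hypothetical helper, not taken from any particular framework; it takes a probe object (in a browser, `document`) so the capability test runs exactly once, and it returns whichever implementation fits.

```javascript
// Hypothetical helper: choose a class-lookup implementation once, at load
// time, using object detection rather than user agent sniffing.
function makeGetByClass(probe) {
  if (typeof probe.getElementsByClassName === 'function') {
    // Modern browsers: delegate to the native method.
    return function (root, name) {
      return root.getElementsByClassName(name);
    };
  }
  // Old browsers: scan every element and match the class as a whole token.
  // Every browser still downloads this branch, even if it never runs it.
  var escape = function (s) {
    return s.replace(/[.*+?^${}()|[\]\\]/g, '\\$&');
  };
  return function (root, name) {
    var re = new RegExp('(^|\\s)' + escape(name) + '(\\s|$)');
    var all = root.getElementsByTagName('*');
    var out = [];
    for (var i = 0; i < all.length; i++) {
      if (re.test(all[i].className)) out.push(all[i]);
    }
    return out;
  };
}

// In a browser: var getByClass = makeGetByClass(document);
```

Note that the two branches don't return the same type (the native method returns a live collection, the fallback a plain array), which is exactly the kind of detail frameworks spend bytes smoothing over. The fallback is the cruft a modern browser pays to download and never uses.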

We shouldn’t have to download all of that cruft on the other side of the if() statement when we don’t use it!

The GWT compiler can output versions of your application targeted at a given browser, so only the fastest, minimal code gets sent down. Deferred binding also goes far beyond browser type: it can deal with locales and much more.

GWT isn’t the only horse in this race though.

Alex Russell started sprinkling directives into Dojo that let you do a WebKit-targeted build. A major use case for all of this is making sure you send lean code down in the mobile space. It can matter everywhere, but when you are dealing with grown-up walkie-talkies… you really want to optimize.
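To make the build-time idea concrete, here is a small Node.js sketch of a build step that drops code blocks tagged for platforms you are not targeting. The `//>>begin(tag)` / `//>>end(tag)` markers and the `stripForTarget` function are hypothetical, loosely in the spirit of Dojo's build directives rather than their actual syntax.

```javascript
// Hypothetical build step: remove source blocks wrapped in
//   //>>begin(tag)  ...  //>>end(tag)
// unless `tag` is one of the platforms this build targets.
function stripForTarget(source, keepTags) {
  var lines = source.split('\n');
  var out = [];
  var skipping = null; // tag of the block currently being dropped, if any
  for (var i = 0; i < lines.length; i++) {
    var line = lines[i];
    var begin = /^\s*\/\/>>begin\((\w+)\)\s*$/.exec(line);
    var end = /^\s*\/\/>>end\((\w+)\)\s*$/.exec(line);
    if (begin) {
      // Start dropping only if we are not already inside a dropped block.
      if (skipping === null && keepTags.indexOf(begin[1]) === -1) {
        skipping = begin[1];
      }
      continue; // markers never reach the output
    }
    if (end) {
      if (end[1] === skipping) skipping = null;
      continue;
    }
    if (skipping === null) out.push(line);
  }
  return out.join('\n');
}
```

A WebKit-only mobile build would then call something like `stripForTarget(src, ['webkit'])`, and the IE-specific blocks would never be downloaded by a phone at all.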

And this brings us to YUI. I was really excited to see some of the features in the YUI 3.2.0 preview release. Great stuff for touch/gesture support, but what stood out for me was “YUI’s intrinsic Loader now supports capability-based loading”. A-ha!

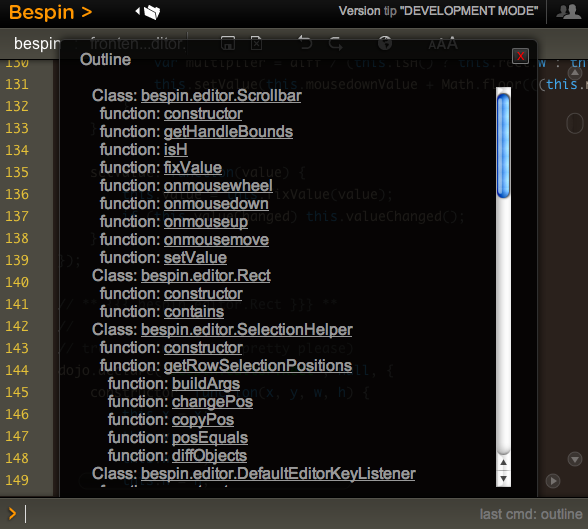

I poked around the source to see how it is used. Here is a contrived example that shows how it works. When you boot up YUI, you can add "conditions" that decide whether a module gets loaded. You can currently key off the user agent, or write a test function:

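```javascript
YUI({
  modules: {
    // Load lib2 when node-base is loaded, but only in Gecko browsers.
    lib2: {
      requires: ['yui'],
      fullpath: 'js/lib2.js',
      condition: { trigger: 'node-base', ua: 'gecko' }
    },
    // Load lib3 when event-base is loaded and the test function is truthy.
    lib3: {
      requires: ['yui'],
      fullpath: 'js/lib3.js',
      condition: {
        trigger: 'event-base',
        test: function (Y, req) {
          return Y.UA.gecko; // Gecko version number, or 0 elsewhere
        }
      }
    }
  }
}).use('node', function (Y) {
  // ....
});
```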
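To see what a loader has to do with these conditions, here is a deliberately simplified resolver: given the modules about to be loaded and a table of conditional modules, it returns the extra modules whose trigger is being loaded and whose `ua` or `test` condition passes. This is a sketch of the idea only, not YUI's actual Loader code; `resolveConditionals` is hypothetical, and passing the loading list as the `test` function's second argument is an assumption.

```javascript
// Simplified sketch (not YUI's Loader): find conditional modules to add.
//   loading: names of modules already scheduled to load
//   modules: map of module name -> { condition: { trigger, ua?, test? } }
//   Y:       object with a UA map (engine -> version number, 0 if absent)
function resolveConditionals(loading, modules, Y) {
  var extra = [];
  Object.keys(modules).forEach(function (name) {
    var cond = modules[name].condition;
    // Only consider modules whose trigger is actually being loaded.
    if (!cond || loading.indexOf(cond.trigger) === -1) return;
    var pass = false;
    if (typeof cond.test === 'function') {
      pass = !!cond.test(Y, loading);   // capability (or any) test
    } else if (cond.ua) {
      pass = !!Y.UA[cond.ua];           // user agent check
    }
    if (pass && loading.indexOf(name) === -1) extra.push(name);
  });
  return extra;
}
```

With the config above in a Gecko browser, loading `node-base` alone would add only `lib2`; loading `event-base` as well would add `lib3` too.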

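The YUI team uses this itself to load certain ugly DOM code for Internet Explorer only when it is needed. Here is the loader metadata entry, followed by the module definition:

```javascript
// Loader metadata: when dom-style is loaded in IE, pull in dom-style-ie too.
"dom-style-ie": {
  "condition": { "trigger": "dom-style", "ua": "ie" },
  "requires": [ "dom-style" ]
},

// The module itself, registered with YUI.add:
YUI.add('dom-style-ie', function(Y) {
  // ....
});
```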

This makes a ton of sense, especially for the libraries themselves. They are the ones doing the heavy lifting of cross-browser ugliness, all so that we can write to an API that works.

Beyond that, we can split out our own code the same way if it becomes a performance issue.

Of course, the value of the test() function is that you can do capability-based testing (not just user agent testing… hence the name!). For example, you can test for Canvas support (as Zach mentions in the comments) with `document.createElement('canvas').getContext`, and load excanvas or another shim library if it doesn't exist.
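A sketch of that Canvas case as a YUI conditional module, following the `condition.test` shape from the example earlier. The module name `canvas-shim`, the path `js/excanvas.js`, and the choice of `node-base` as the trigger are placeholders; in practice you would trigger on whichever module of yours actually draws.

```javascript
// Capability test (no user agent sniffing): returns true when the browser
// has no native canvas getContext, i.e. when a shim should be loaded.
function needsCanvasShim(doc) {
  var el = doc.createElement('canvas');
  return !(el && typeof el.getContext === 'function');
}

var yuiConfig = {
  modules: {
    'canvas-shim': {                 // hypothetical module name
      fullpath: 'js/excanvas.js',    // hypothetical path to excanvas
      condition: {
        trigger: 'node-base',        // placeholder trigger module
        test: function (Y, req) {
          return needsCanvasShim(document);
        }
      }
    }
  }
};

// In the page: YUI(yuiConfig).use('node', function (Y) { /* draw */ });
```

Keeping the test in its own function means it can be exercised outside the browser by passing a stub in place of `document`.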

Using Modernizr and its ilk, we could build out "plugins" for YUI that load automatically based on common capabilities. You can imagine running a YUI build, having it detect that you are using a capability, and then automatically pulling in a plugin with the condition already wired up. What a great developer experience!
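A rough sketch of that idea: a hypothetical `shimModules` helper that turns a table of Modernizr feature names and shim files into YUI conditional module definitions. Modernizr exposes detected features as boolean properties such as `Modernizr.canvas` and `Modernizr.localstorage`; the detection object is passed in rather than read from a global, and the trigger module and file paths are placeholders.

```javascript
// Hypothetical helper: build YUI conditional-module definitions from a
// table of { featureName: shimPath }, using a Modernizr-style detection
// object where each feature is a boolean property.
function shimModules(detect, shims, trigger) {
  var modules = {};
  Object.keys(shims).forEach(function (feature) {
    modules[feature + '-shim'] = {
      fullpath: shims[feature],
      condition: {
        trigger: trigger,
        // Load the shim only when the feature is missing.
        test: function (Y, req) { return !detect[feature]; }
      }
    };
  });
  return modules;
}

// In a browser, after Modernizr has run (paths are placeholders):
// YUI({ modules: shimModules(Modernizr, {
//   canvas: 'js/excanvas.js',
//   localstorage: 'js/storage-shim.js'
// }, 'node-base') }).use('node', function (Y) { /* ... */ });
```

A build tool could generate the `shims` table from the capabilities it sees your code using, which is the "plugin with the condition already wired up" described above.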

Maybe it makes sense to come up with a common pattern and conventions for dealing with this issue. How do you name your CSS/JS files? Do we set up server-side hooks so we don't necessarily need loaders at all?

I can certainly see a day where you may be asking the Google Ajax Library service for jquery.js, but it is returning jquery.ie.js to you.
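Here is one way such a server-side hook might pick the file, as a sketch under a hypothetical naming convention where a browser-specific build of `foo.js` is published as `foo.<variant>.js`. A server only sees request headers and cannot run feature tests, so this falls back to user agent matching; the `VARIANTS` rules and `variantFor` function are illustrative, not any real CDN's behavior.

```javascript
// Hypothetical convention: foo.js may have browser builds named
// foo.<variant>.js (e.g. jquery.ie.js). First matching rule wins.
var VARIANTS = [
  { name: 'ie', match: /MSIE |Trident\// },
  { name: 'webkit', match: /AppleWebKit\// },
  { name: 'gecko', match: /Gecko\/\d/ }
];

function variantFor(file, userAgent, available) {
  var dot = file.lastIndexOf('.');
  if (dot === -1) return file;
  for (var i = 0; i < VARIANTS.length; i++) {
    if (VARIANTS[i].match.test(userAgent || '')) {
      var candidate = file.slice(0, dot) + '.' + VARIANTS[i].name + file.slice(dot);
      // Only serve a variant that was actually built and published.
      return available.indexOf(candidate) !== -1 ? candidate : file;
    }
  }
  return file; // unknown browser: send the generic build
}
```

Because the response now depends on the request's User-Agent, the server should also send `Vary: User-Agent` so shared caches don't hand the IE build to everyone else.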