Remember when the Object database was going to kill the Relational database?

OOP was the sexy programming model, and relational set theory seemed so quaint. Once you are using Objects, why wouldn’t you just want to persist them instead of having to drop down to this crazy SQL? Inner joins instead of just person.name.first? Fools.

Well, it didn’t quite work out that way, of course. Instead we got halfway measures such as object-relational systems, and the huge quagmire (as Ted Neward would put it) of the ORM years, which continue to do well.

Why did Object databases fail? If I remember correctly, there were a couple of problems:

- They were slow at first.

- People had a crap load of tools around the relational world.

It was fine to do some simple work, but what about reporting? Where was the Business Objects for the Object database? I remember working with a huge bank that used Versant AND Oracle, and they had a nightmare involving syncing between the two.

Ok, so the Object database failed, so what is the new attack?

The Cloud-y Web

SQL is an enterprise victory that managed to make its way into the consumer Web and application space. A lot of people knew SQL, and it seemed obvious to have a LAMP stack or a Java / .NET stack backed by an RDBMS.

Is this really the right choice for Web applications? Why was Rails so successful? It was due to the productivity gain. How much of that is due to ActiveRecord vs. the other Action* pieces that make up Rails? I would argue a large percentage. Working with the database was actually a big pain in the tuches. ActiveRecord together with migrations helped a lot. It gave us a nice middle man between a full ORM and the SQL that we know and … know.

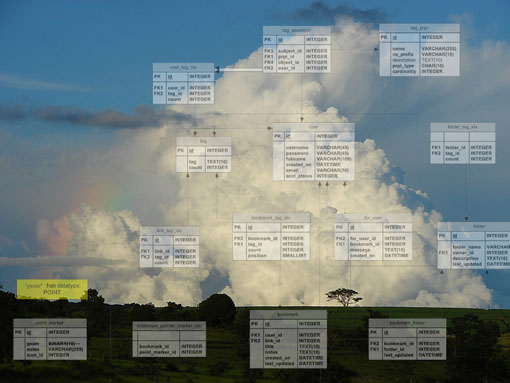

What if the database piece didn’t need to be that painful? The source of the pain can be the paradigm shift between the various worlds, but also a huge part of it is scalability. When you have to scale your website, it can be fairly easy to make your application stateless, and then the bottleneck becomes the poor database. This is when you break out the master / slave relationships, think about partitioning of the application, and caching layers (Tangosol Coherence, memcached). Now you have to really think about an architecture ;)

Google had to do this thinking a long time ago, as they obviously have to scale their applications to a huge degree. Scaling the fairly read-only search operation is one thing, but as soon as you get to read-write operations you have a lot more of a headache. Scaling an MMORPG astounds me: it has to be that real-time while the world is constantly changing. Wow. At least there is the separation of locations (world X can be this cluster of machines).

Now we get to Bigtable, the engine that Google built to scale in the cloud. Amazon has their new SimpleDB, and there are others.

What these guys are all doing is revisiting the database story. Maybe it is time to think about whether an RDBMS is really the no-brainer choice.

When Google App Engine launched, I thought there would be a lot of people saying “oh man, I just want MySQL instead of this new thing”. I barely heard that, and instead heard more thoughts along the lines of “It is great to be able to use the scalable database that Google uses internally.” In fact, when you start using it and see that it is schema-less, you get a bit of a relief. You can build your model, and even use an Expando to be highly dynamic on the data in the backend. You go along your way, iterating on your code and model and you don’t have to spend time working on up and down migration methods. Doesn’t that remind you a little of the OODBMS dreams? But this time it is fast and scalable!
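To make the schema-less point concrete, here is a rough sketch in plain Python. It is not the App Engine datastore API: `Entity` and `SchemalessStore` are made-up names for an in-memory stand-in, and the only thing it is meant to show is that two entities of the same kind can carry different properties without any migration step in between.

```python
# Hypothetical stand-in for a schema-less datastore; NOT the App Engine API.
class Entity:
    """An entity whose properties are whatever you assign to it."""

    def __init__(self, kind, **properties):
        self.kind = kind
        self.properties = dict(properties)


class SchemalessStore:
    """Keeps entities in memory with no declared schema per kind."""

    def __init__(self):
        self._entities = []

    def put(self, entity):
        self._entities.append(entity)

    def query(self, kind, **filters):
        """Return entities of `kind` whose properties match every filter."""
        return [
            e for e in self._entities
            if e.kind == kind
            and all(e.properties.get(k) == v for k, v in filters.items())
        ]


store = SchemalessStore()

# Version 1 of the model: a person has just a name.
store.put(Entity("Person", name="Ada"))

# Version 2 adds a field. The older entity is untouched; no migration runs.
store.put(Entity("Person", name="Grace", twitter="@grace"))

people = store.query("Person")
with_twitter = store.query("Person", twitter="@grace")
```

Older entities simply lack the new property, so code reading them has to cope with a missing value. That is the trade you make for skipping the up and down migrations.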

Resting on the Couch

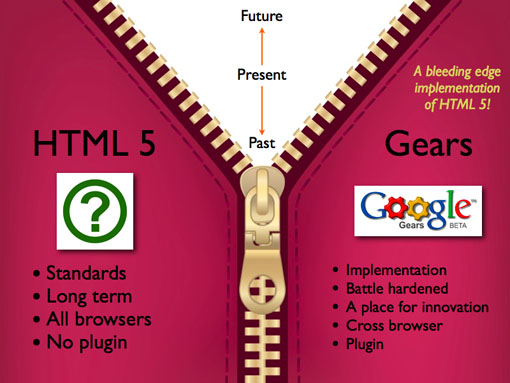

With App Engine driving interest in Bigtable from one end, we also have CouchDB pushing from the other: the end that asks what a RESTful approach to a database would look like.

Apache CouchDB is a distributed, fault-tolerant and schema-free document-oriented database accessible via a RESTful HTTP/JSON API.

JSON built in. JavaScript right there. A database built for the Web?
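As a small illustration of what "accessible via a RESTful HTTP/JSON API" means in practice, the sketch below builds the two HTTP requests you would use to store and fetch a document. The assumptions are mine: a CouchDB server on localhost at its default port 5984, a database called `people`, and a document id of `ada`. The requests are only constructed here, not sent, so the snippet runs without a server.

```python
import json
import urllib.request

# Assumption: a CouchDB server at this address (5984 is CouchDB's default port).
COUCH_URL = "http://localhost:5984"


def put_document_request(db, doc_id, doc):
    """Build (but do not send) the request that stores `doc` as JSON."""
    return urllib.request.Request(
        url=f"{COUCH_URL}/{db}/{doc_id}",
        data=json.dumps(doc).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


def get_document_request(db, doc_id):
    """Build the request that reads the same document back."""
    return urllib.request.Request(url=f"{COUCH_URL}/{db}/{doc_id}", method="GET")


# Hypothetical database name, document id, and document body.
put_req = put_document_request("people", "ada", {"name": "Ada", "likes": ["JSON"]})
get_req = get_document_request("people", "ada")

# To talk to a real, running server you would send them, for example:
#   with urllib.request.urlopen(put_req) as resp:
#       print(json.load(resp))
```

The document is addressed by a URL and its body is plain JSON, so anything that can speak HTTP, including a browser, can be a client.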

It is great to see new ideas and thought about the storage of data. The RDBMS isn’t going anywhere of course. There are still a ton of tools and legacy code out there for it, and we all know that:

Data stays where it lies.

It is much easier to implement a new application talking to the old datastore than to migrate the datastore itself. It is like taking out the foundation. SQL is also getting new life in other places.

SQLite

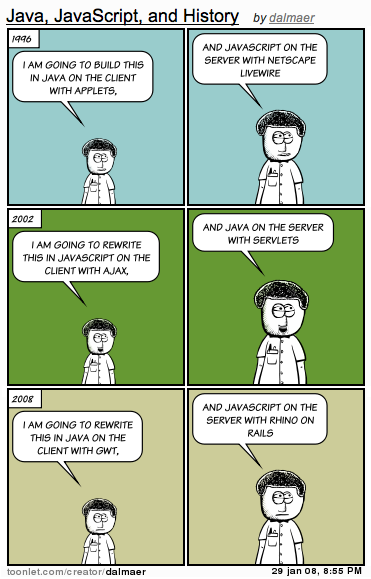

I recently saw an application that used GWT on the client, and JavaScript on the server, which reminded me of my comic above. I wonder if we may end up with another flip, with SQL being used on the client, and other systems like CouchDB, Bigtable, etc. being used in the enterprise / on the server.

It is happening on the client. SQLite seems to be everywhere. Your operating system, phone, browser, applications, everywhere. I bet I have around 20 SQLite engines on my system right now, and growing. Why is this happening? Well, instead of coming up with your own data format, parser, and search engine, why not just use SQLite and be done? It is very fast and perfect for single-user mode, so everyone is a winner.
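Here is roughly what "just use SQLite and be done" looks like from an application, using the `sqlite3` module that ships with Python. The `bookmarks` table and its rows are invented for the example, and it uses an in-memory database so nothing is written to disk.

```python
import sqlite3

# An in-memory database; pass a file path instead to persist to disk.
conn = sqlite3.connect(":memory:")

# The "data format": one table instead of a hand-rolled file layout.
conn.execute(
    "CREATE TABLE bookmarks (id INTEGER PRIMARY KEY, title TEXT, url TEXT)"
)
conn.executemany(
    "INSERT INTO bookmarks (title, url) VALUES (?, ?)",
    [
        ("SQLite home", "https://www.sqlite.org/"),
        ("CouchDB home", "https://couchdb.apache.org/"),
    ],
)
conn.commit()

# The "search engine": a parameterized query instead of a custom parser.
rows = conn.execute(
    "SELECT title FROM bookmarks WHERE title LIKE ? ORDER BY title",
    ("%SQLite%",),
).fetchall()
conn.close()
```

There is no server to install or configure: the whole engine lives inside the application process, which is why it fits the single-user case so well.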

So, SQL has a looooong future ahead of it, but it will be interesting to see how the RDBMS weathers the latest storm.

What do you think?