With the iPhone / App Store debacle, a lot of people are thinking about distribution these days. As more news comes in, I definitely feel a lot closer to taking the blue pill, that is for sure.

I have also overheard several conversations in the last few weeks that conflate open source and the magic of distribution.

Alex (was so tempted to say Dylan here for a second, man! ;) posted about perspective: not all open source is equal, but that doesn’t make something on the lower end of the scale intrinsically evil.

I have to keep reminding myself of this. When a company comes along and open sources something, I am often initially cynical because the term “open” has been so widely usurped. If I feel that said company is using open source only as a marketing vehicle, I get defensive. One example may be Flex and Adobe. You could look at the open sourcing of Flex with a wink and say “Erm, the core platform is proprietary, so by open sourcing the pieces on top… nice work ;)” Or, you could see that Adobe has potentially added value to the Flex community there.

For one, there is huge value in having the darn source. For example, Francisco Tolmasky just said:

No amount of documentation can ever surpass just having the source in front of you (read: thank god webkit is open source)

If you run into a bug in Flex, you have another avenue to hunt it down.

However, that is just one important surface value. Adobe could potentially get so much more out of a more open development process, one that includes the community. They could do well to get some more points, although that doesn’t come without a cost too. Dealing with people is hard.

The thing is, the company in question gets to choose how they open source their software. When we jump up and down over the fact that they use the GPL, or that they don’t listen to us, we have to remember that the choice is theirs. That doesn’t mean we have to be quiet, or that we shouldn’t review what their “opening” could mean… but we can still respect them. I would love to see a podcast that talks about the various forms of “Open”. I think a rigorous discussion of various case studies would open a lot of eyes.

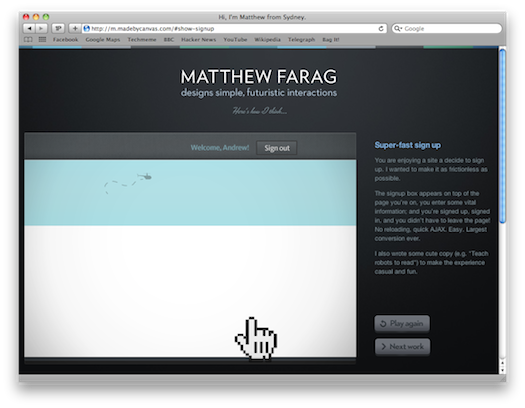

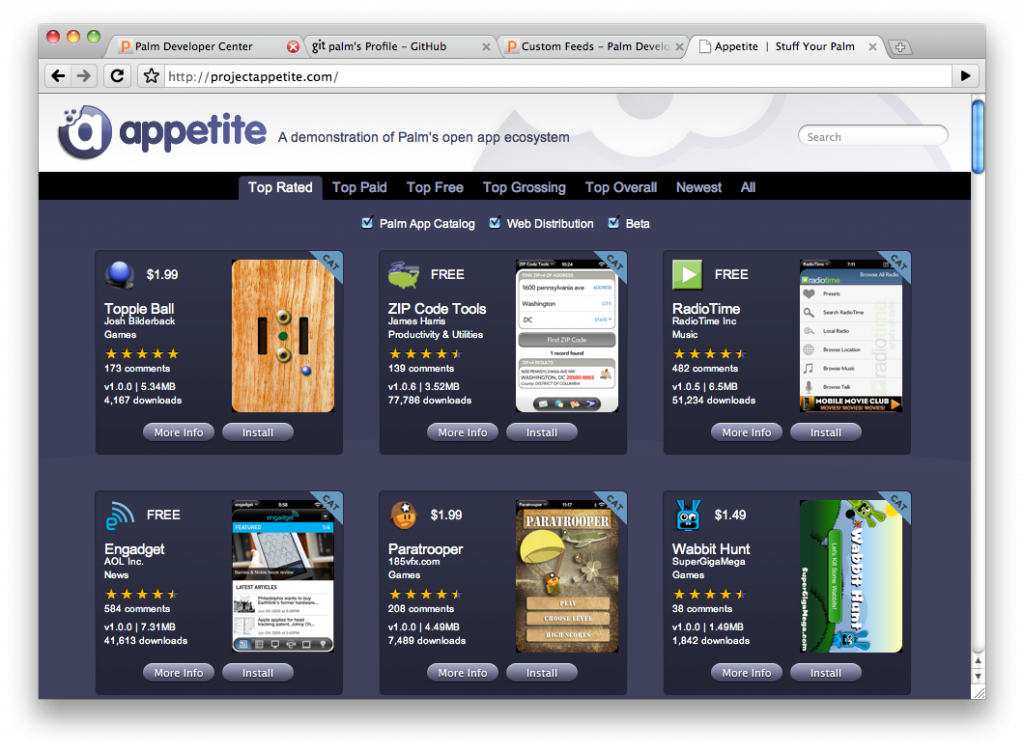

To the distribution part. Open source is fine and dandy, but how do you get your bits out to real users? That is what matters, and that is what is causing the kerfuffle with the App Store. You are in lock-down. It shows us how friggin’ awful the mobile Web is compared to the wired Web. Imagining a world where you put a website up and had to ask ONE company “hey, can you allow people to go to mynewapp.com please?” makes you shiver… but that is the way of the world on far too much of the mobile Web. As locked down as Apple is, the world is still vastly better than the one where the carriers ruled the waves. Putting those buggers in charge of just the pipes, and having them compete on creating the best pipes to the ‘net, is something I can’t wait to see.

The Web won majorly due to distribution. As long as you can get someone a URL, you are there. Getting URLs to people keeps getting easier with various viral and communication systems. That Google thing helps connect the docs too.

You don’t have to look too far though to see the closing of the walls in some ways. Facebook, and the like. It seems that they have seen this though and instead of wanting to stay in their ivory tower, they want to bring the tower to all websites via Facebook Connect.

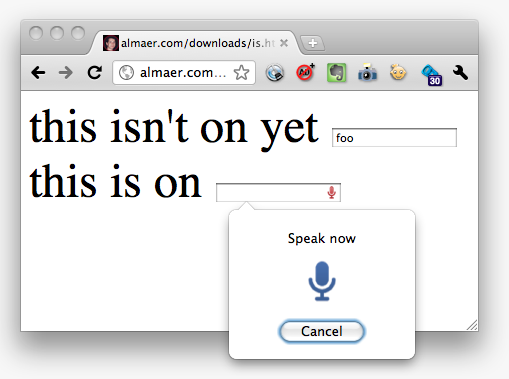

With the lens of distribution, you can look at technology such as browser add-ons, Gears, Flash, and browsers themselves and see that you need more than open source. It is fantastic that I could take Gecko or WebKit/Chromium and create a browser. That doesn’t mean that anyone will use it. I really like the path that Yahoo! BrowserPlus is on… not just because they are open sourcing the platform, but because they are working on a strategy to allow people to create their own services. Gears punted on this issue. You could write up a spec and try to persuade the Gears team to put a new Gear into the toolbox, but that would be really hard. For one, it has to be tested and secured on the list of platforms that Gears supports. And, thanks for version 1, but what about maintenance? Tough problems. It still feels like we need to allow developers to “experiment on the edges”, and the best platforms allow just that (hence, hoping that Y!BP takes off).

So, maybe we need a 100-point guide to open distribution to go along with the open source one? They definitely overlap. A good community-driven open source process will get you some points on the distribution ladder, but you could also easily have an open distribution mechanism on a closed platform.